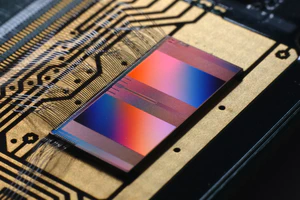

The BrainScaleS-2 is an accelerated spiking neuromorphic system-on-chip integrating 512 adaptive integrate-and-fire neurons, 131k plastic synapses, embedded processors, and event routing. It enables fast emulation of complex neural dynamics and exploration of synaptic plasticity rules. The architecture supports training of deep spiking and non-spiking neural networks using hybrid techniques like surrogate gradients.

Developed By: Heidelberg University and collaborators

The BrainScaleS-2 accelerated neuromorphic system is an integrated circuit architecture for emulating biologically inspired spiking neural networks. It was developed by researchers at Heidelberg University and their collaborators. Key features of the BrainScaleS-2 system include:

System Architecture

- Single-chip ASIC integrating a custom analog core with 512 neuron circuits, 131k plastic synapses, analog parameter storage, embedded processors for digital control and plasticity, and an event routing network

- Processor cores run a software stack with a C++ compiler and support hybrid spiking and non-spiking neural network execution

- Can serve as a unit of scale for larger multi-chip or wafer-scale systems

Neural and Synapse Circuits

- Implements the Adaptive Exponential Integrate-and-Fire (AdEx) neuron model with individually configurable model parameters

- Supports advanced neuron features such as multi-compartment, structured neurons

- On-chip synapse correlation and plasticity measurement enable programmable spike-timing dependent plasticity
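
As a rough illustration of the AdEx dynamics named above, the sketch below integrates the standard model equations in software with a forward-Euler step: a leaky membrane with an exponential spike-initiation term, plus an adaptation current that grows with each spike. The parameter values are ones commonly used in the AdEx literature and are an assumption made for illustration; they are not BrainScaleS-2 calibration values, and the function name `simulate_adex` is hypothetical. On the chip these dynamics are realized by analog circuits rather than by numerical integration.

```python
import math


def simulate_adex(i_ext_pa, t_stop_ms=200.0, dt_ms=0.01):
    """Forward-Euler integration of the AdEx model; returns spike times in ms."""
    # Illustrative parameters (assumed, not hardware calibration values).
    c_m = 281.0      # membrane capacitance, pF
    g_l = 30.0       # leak conductance, nS
    e_l = -70.6      # leak reversal potential, mV
    v_t = -50.4      # exponential threshold, mV
    delta_t = 2.0    # slope factor, mV
    tau_w = 144.0    # adaptation time constant, ms
    a = 4.0          # subthreshold adaptation, nS
    b = 80.5         # spike-triggered adaptation increment, pA
    v_peak = 0.0     # spike detection level, mV
    v_reset = e_l    # reset potential, mV

    v, w = e_l, 0.0
    spikes = []
    for step in range(int(round(t_stop_ms / dt_ms))):
        # nS * mV = pA, and pA / pF = mV/ms, so the units are consistent.
        exp_term = g_l * delta_t * math.exp((v - v_t) / delta_t)
        dv = (-g_l * (v - e_l) + exp_term - w + i_ext_pa) / c_m
        dw = (a * (v - e_l) - w) / tau_w
        v += dt_ms * dv
        w += dt_ms * dw
        if v >= v_peak:
            # Spike: record the time, reset the membrane, increment adaptation.
            spikes.append((step + 1) * dt_ms)
            v = v_reset
            w += b
    return spikes


spike_times = simulate_adex(1000.0)  # constant 1 nA input current
```

With a constant suprathreshold current, the intervals between successive entries of `spike_times` lengthen over time, which is the spike-frequency adaptation that distinguishes AdEx from a plain leaky integrate-and-fire neuron.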

Hybrid Plasticity Processing

- Digital control processors allow flexible implementation of plasticity rules bridging multiple timescales

- Massively parallel readout of analog observables enables gradient-based and surrogate gradient optimization approaches
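
The idea behind surrogate gradient training can be sketched in a few lines. The forward pass uses a hard threshold, whose true derivative is zero almost everywhere, and the backward pass substitutes a smooth stand-in evaluated at the recorded membrane potential. The fast-sigmoid surrogate and all names below (`spike`, `surrogate_grad`, `train_weight`) are assumptions chosen for illustration; they are not taken from the BrainScaleS-2 software stack, where the membrane potential would come from the analog readout rather than from `w * x`.

```python
def spike(v, threshold=1.0):
    """Forward pass: hard threshold (Heaviside step)."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=10.0):
    """Backward-pass stand-in: derivative of a fast sigmoid around the threshold."""
    return 1.0 / (1.0 + beta * abs(v - threshold)) ** 2


def train_weight(x=1.0, target=1.0, w=0.2, lr=0.5, steps=200):
    """Gradient descent on a single weight with loss (spike - target)^2."""
    for _ in range(steps):
        v = w * x                 # stands in for a measured membrane potential
        s = spike(v)              # non-differentiable forward pass
        # Chain rule with the surrogate replacing d(spike)/dv.
        grad = 2.0 * (s - target) * surrogate_grad(v) * x
        w -= lr * grad
    return w


w_trained = train_weight()
```

Starting from a weight that is too small to elicit a spike, the surrogate still yields a nonzero gradient, so the weight grows until the neuron crosses threshold and the loss reaches zero.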

Applications and Experiments

- Accelerated (1,000-fold compared to biological real time) emulation of complex spiking neural network dynamics, including configurable multi-compartmental cell morphologies

- Exploration of synaptic plasticity models and critical network dynamics at biological timescales

- Training of deep spiking neural networks using surrogate and exact gradient techniques

- Non-spiking neural network execution leveraging synaptic crossbar for analog matrix multiplication

- Available via three different software frameworks:

  - jaxsnn, a JAX-based framework for event-based numerical simulation of SNNs

  - hxtorch, a PyTorch-based deep learning Python library for SNNs

  - PyNN.brainscales2, an implementation of the PyNN API
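
To make the analog matrix multiplication mentioned in the list above more concrete, here is a minimal software sketch of a crossbar-style multiply-accumulate with limited-resolution operands and a saturating readout. The bit widths, the `gain` factor, and the function names are assumptions for illustration only; they do not describe the hxtorch API, and the sketch ignores analog noise and fixed-pattern variation.

```python
def quantize(value, bits, signed):
    """Round to the nearest integer level and clip to the representable range."""
    hi = 2 ** bits - 1
    lo = -hi if signed else 0
    return max(lo, min(hi, round(value)))


def analog_matvec(weights, inputs, weight_bits=6, input_bits=5,
                  gain=1.0 / 64, out_bits=8):
    """Emulate y = W x with quantized operands and a saturating readout.

    Each row of `weights` corresponds to one output neuron.
    """
    x_q = [quantize(x, input_bits, signed=False) for x in inputs]
    outputs = []
    for row in weights:
        w_q = [quantize(w, weight_bits, signed=True) for w in row]
        # Accumulation stands in for charge integrated on the membrane.
        charge = sum(w * x for w, x in zip(w_q, x_q))
        # Scale and digitize; values outside the range saturate.
        outputs.append(quantize(charge * gain, out_bits - 1, signed=True))
    return outputs


result = analog_matvec([[10, -20], [63, 63]], [31, 31])  # → [-5, 61]
```

The clipping in `quantize` is the part that matters for training: a network meant to run on such a substrate has to keep its weights, inputs, and accumulated sums within the ranges the hardware can represent.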

The accelerated operation and flexible architecture facilitate applications in computational neuroscience research and novel machine learning approaches. The system design serves as a scalable basis for future large-scale neuromorphic computing platforms.
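
The practical effect of the 1,000-fold acceleration is easiest to see as arithmetic. The helper names below are hypothetical, and the sketch assumes the nominal speedup factor applies uniformly to the whole experiment, ignoring configuration and data-transfer overhead.

```python
SPEEDUP = 1000  # nominal acceleration relative to biological real time


def wall_clock_seconds(biological_seconds, speedup=SPEEDUP):
    """Wall-clock duration needed to emulate a span of biological time."""
    return biological_seconds / speedup


def hardware_time_constant_us(biological_ms, speedup=SPEEDUP):
    """Map a biological time constant in ms to its hardware value in µs."""
    return biological_ms * 1000.0 / speedup


one_day = wall_clock_seconds(24 * 60 * 60)    # → 86.4 seconds of wall-clock time
tau_mem = hardware_time_constant_us(10.0)     # → 10.0 µs for a 10 ms time constant
```

Under these assumptions, a full day of biological network activity is emulated in under a minute and a half, which is what makes long-running plasticity experiments feasible.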

Related publications

| Date | Title | Authors | Venue/Source |
|---|---|---|---|
| April 2024 | jaxsnn: Event-driven gradient estimation for analog neuromorphic hardware | Eric Müller, Moritz Althaus, Elias Arnold, Philipp Spilger, Christian Pehle, Johannes Schemmel | 2024 Neuro-Inspired Computational Elements Conference (NICE) |
| April 2023 | hxtorch.snn: Machine-learning-inspired Spiking Neural Network Modeling on BrainScaleS-2 | Philipp Spilger, Elias Arnold, Luca Blessing, Christian Mauch, Christian Pehle, Eric Müller, Johannes Schemmel | 2023 Neuro-Inspired Computational Elements Conference (NICE) |
| May 2022 | A Scalable Approach to Modeling on Accelerated Neuromorphic Hardware | Eric Müller, Elias Arnold, Oliver Breitwieser, Milena Czierlinski, Arne Emmel, Jakob Kaiser, Christian Mauch, Sebastian Schmitt, Philipp Spilger, Raphael Stock, Yannik Stradmann, Johannes Weis, Andreas Baumbach, Sebastian Billaudelle, Benjamin Cramer, Falk Ebert, Julian Göltz, Joscha Ilmberger, Vitali Karasenko, Mitja Kleider, Aron Leibfried, Christian Pehle, Johannes Schemmel | Frontiers in Neuroscience (Neuromorphic Engineering) |
| February 2022 | The BrainScaleS-2 accelerated neuromorphic system with hybrid plasticity | Christian Pehle, Sebastian Billaudelle, Benjamin Cramer, Jakob Kaiser, Korbinian Schreiber, Yannik Stradmann, Johannes Weis, Aron Leibfried, Eric Müller, Johannes Schemmel | Frontiers in Neuroscience (Neuromorphic Engineering) |
| January 2021 | hxtorch: PyTorch for BrainScaleS-2 — Perceptrons on Analog Neuromorphic Hardware | Philipp Spilger, Eric Müller, Arne Emmel, Aron Leibfried, Christian Mauch, Christian Pehle, Johannes Weis, Oliver Breitwieser, Sebastian Billaudelle, Sebastian Schmitt, Timo C. Wunderlich, Yannik Stradmann, Johannes Schemmel | 2020 International Workshop on IoT, Edge, and Mobile for Embedded Machine Learning (ITEM) |
