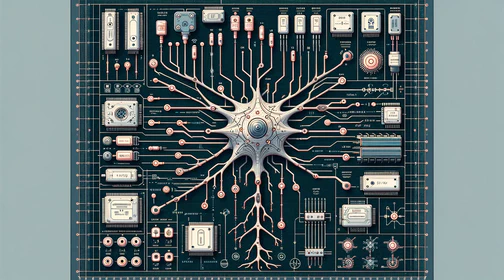

Tianjic supports both spiking and non-spiking models. Its motivation is to enable hybrid networks that blend biological plausibility from neuroscience with predictive accuracy from deep learning.

Developed By: Tsinghua University

Tianjic is a unified neural network chip architecture proposed in 2019 that aims to efficiently support both spiking neural networks (SNNs) from the neuromorphic computing field and artificial neural networks (ANNs) commonly used in deep learning. It was unveiled by a team of researchers from Tsinghua University in Beijing, led by Professor Luping Shi.

The motivation behind Tianjic was to create a single chip architecture that could run models from both the SNN and ANN paradigms, so that researchers could better explore potential synergies between neuroscience and machine learning. Earlier specialized hardware platforms for SNNs and ANNs relied on very different compute, memory, and communication designs, which made it difficult to study hybrid approaches that combine biological plausibility with predictive accuracy.

The Tianjic architecture consists of five key building blocks: a hybrid activation buffer, local synapse memory, a shared integration engine, a nonlinear transformation unit, and network connectors. It supports six core vector/matrix operations used across neuromorphic and deep learning models, as well as several neuronal transformation functions. It incorporates several optimizations to improve performance and efficiency, including near-memory computing, input data sharing, computational skipping of zero activations and weights, and inter-group pipelining.
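The idea behind skipping zero activations and weights can be illustrated in software. The following is a minimal sketch, not the chip's actual datapath: the function name `skip_zero_matvec` and the row-major weight layout are assumptions made for illustration. It computes a weighted sum per neuron while counting only the multiply-accumulate operations that are actually performed.

```python
def skip_zero_matvec(weights, activations):
    """Weighted sum per output neuron, skipping zero operands.

    weights[j][i] is the (hypothetical) weight from input i to neuron j.
    Returns (outputs, macs), where macs counts the multiply-accumulates
    that were actually executed.
    """
    n_out = len(weights)
    outputs = [0.0] * n_out
    macs = 0
    for i, a in enumerate(activations):
        if a == 0:
            continue  # zero activation: skip every weight it would touch
        for j in range(n_out):
            w = weights[j][i]
            if w == 0:
                continue  # zero weight: contributes nothing
            outputs[j] += w * a
            macs += 1
    return outputs, macs


if __name__ == "__main__":
    weights = [[1, 0, 2],
               [0, 3, 4]]
    activations = [1, 0, 2]  # sparse input, e.g. spikes or ReLU outputs
    outputs, macs = skip_zero_matvec(weights, activations)
    dense_macs = len(weights) * len(activations)
    print(outputs, macs, dense_macs)
```

In this toy case only 3 of the 6 dense multiply-accumulates are executed. Spike trains and ReLU outputs are often sparse, which is why this kind of skipping can pay off for both SNN and ANN workloads.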

The architecture enables flexible homogeneous networks of either SNNs or ANNs as well as heterogeneous networks that combine both spiking and non-spiking elements, referred to as a hybrid paradigm. The neurons can be independently configured to receive spiking or non-spiking inputs and produce spiking or non-spiking outputs. The routing infrastructure seamlessly propagates both spike and activation events.
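The independent input and output configuration can be sketched as a small software model. This is an illustrative assumption rather than the Tianjic implementation: the `HybridLayer` class, its `output_mode` field, the integrate-and-fire rule with reset to zero, and the ReLU transformation are all hypothetical choices. The point it shows is that a shared weighted-sum integration step can accept either binary spikes or real-valued activations, while a separate output stage decides whether to emit spikes or activations.

```python
from dataclasses import dataclass, field


@dataclass
class HybridLayer:
    """Toy layer whose neurons emit either spikes or activations."""
    weights: list                    # weights[j][i]: input i -> neuron j
    output_mode: str = "spike"       # "spike" or "activation" (hypothetical)
    threshold: float = 1.0           # firing threshold in spike mode
    potentials: list = field(init=False, default_factory=list)

    def __post_init__(self):
        if self.output_mode not in ("spike", "activation"):
            raise ValueError("output_mode must be 'spike' or 'activation'")
        self.potentials = [0.0] * len(self.weights)

    def step(self, inputs):
        # Shared integration: the same weighted sum serves binary spikes
        # (0/1) and real-valued activations alike.
        currents = [sum(w * x for w, x in zip(row, inputs))
                    for row in self.weights]
        if self.output_mode == "activation":
            return [max(0.0, c) for c in currents]  # ReLU, stateless
        spikes = []
        for j, c in enumerate(currents):
            v = self.potentials[j] + c   # accumulate membrane potential
            if v >= self.threshold:
                spikes.append(1)
                v = 0.0                  # reset after firing
            else:
                spikes.append(0)
            self.potentials[j] = v
        return spikes


if __name__ == "__main__":
    # Heterogeneous chain: an activation-output layer feeds a spiking layer.
    ann = HybridLayer(weights=[[0.5, 0.5]], output_mode="activation")
    snn = HybridLayer(weights=[[1.0]], output_mode="spike", threshold=1.0)
    for t in range(3):
        activation = ann.step([0.6, 0.6])
        print(t, activation, snn.step(activation))
```

Chaining layers with different `output_mode` values gives a simple picture of the hybrid paradigm: the spiking layer integrates the real-valued output of the non-spiking layer over several time steps before it fires.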

A proof-of-concept 28 nm prototype chip was fabricated and achieved over 610 GB/s of internal memory bandwidth. In evaluations against GPUs and other specialized neuromorphic and deep learning accelerators on a range of SNN and ANN benchmarks, Tianjic demonstrated significant gains in throughput and power efficiency. Two small examples also highlighted the potential of the hybrid paradigm: controlling a bicycle robot with a mix of SNN and ANN networks, and improving SNN scaling through partial integration in a higher-precision ANN format.

The researchers believe the unified architecture of Tianjic opens up new research directions into hybrid neural networks that blend aspects of neuroscience and machine learning models. The chip aims to enable exploration of more biologically plausible yet highly accurate networks for artificial intelligence.

Related publications

| Date | Title | Authors | Venue/Source |
|---|---|---|---|
| February 2020 | Tianjic: A Unified and Scalable Chip Bridging Spike-Based and Continuous Neural Computation | Lei Deng; Guanrui Wang; Guoqi Li; Shuangchen Li; Ling Liang; Maohua Zhu; Yujie Wu; Zheyu Yang; Zhe Zou; Jing Pei; Zhenzhi Wu; Xing Hu; Yufei Ding; Wei He; Yuan Xie; Luping Shi | IEEE Journal of Solid-State Circuits |
